The entire archive of the BBC World Service is being made available online. Tristan Ferne and Mark Flashman, BBC Research & Development, look at some of the challenges involved.

About the authors:

Tristan Ferne, Lead Producer, Internet Research & Future Services, BBC Research & Development. Tristan works for a team at BBC R&D where they use technology and design to prototype the future of media and the web. He is the lead producer in the team and has over 15 years' experience in web, media and broadcast R&D. Originally an engineer, now a digital producer, he has helped develop many influential prototypes and concepts for the BBC's Future Media division.

E-mail: tristan.ferne@bbc.co.uk

Twitter: @tristanf

Mark Flashman, BBC Internet Research and Future Services. Mark has worked in various roles at the BBC, including Operations Manager at the World Service, where he played a leading part in the digitisation of broadcast operations, music reporting and channel management. He played a significant role in the introduction and development of the first digital programme archive at the BBC, and researched and wrote SEO (search engine optimisation) strategies for the BBC’s foreign-language teams. He has recently moved to the BBC’s Internet Research and Future Services team, where he is exploring ways to unlock archives using automatically generated and crowd-sourced metadata.

E-mail: mark.flashman@bbc.co.uk

Web: www.bbc.co.uk/rd

Introduction

Between 2005 and 2008 the BBC World Service, with much foresight, digitised the contents of its Recorded Programme library. This included what had been archived from the English-language radio services over the previous 45 years: over 50,000 programmes covering a wide range of subjects, from weekly African news reviews covering events in Sierra Leone as they happened to interviews with Steven Spielberg. The BBC's Research & Development department is experimenting with putting this radio archive online, both so that anyone can listen to it and so that listeners can help improve it.

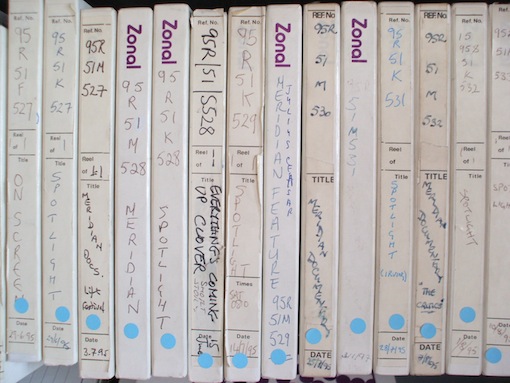

Archiving and digitising

In 2005 the shelves of the physical programme archive in Bush House, home to the BBC World Service since 1941, were filling up, and the building was due to be vacated within a few years. Something had to be done.

The archive consisted of around 50,000 recorded programmes kept on ¼” tape, DAT and audio CD in the Recorded Programme library. The digitisation project recorded these in real time onto servers as high-quality digital audio files, a process that took three years. One of the final parts of the project was to add any recordings found on shelves in people's offices as staff moved out of Bush House. As shelves were emptied, piles of CDs in lever-arch files were found, and another 7,000 programmes were added. All of these digitised audio files were then automatically linked to the BBC’s in-house information and archives system, Infax, and a simple website was built for BBC staff to search for, preview and download the programmes.

Interestingly, this process revealed a significant number of 'ghost' programmes in the archive database: ones that appeared in the records but had no recorded audio. There appears to be no single cause for this, but we do know that before the advent of digital media and production fewer programmes were archived. The cost of media was a factor, and at one point the World Service even had a tape reclamation unit that recycled tape from unused and unwanted spools, wiped it and then stuck it back together for use by others. Programmes were borrowed from the library and never returned, some simply never made it to the archive in the first place, and some had been scheduled but were never broadcast because outside events, such as the first Gulf War, intervened.

The digitisation project was a great success. It made the move to new premises in Broadcasting House much easier, and the archiving process is now much simpler, more consistent and automated. However, although we had all the programmes digitised as high-quality audio, the descriptive metadata was limited in both quality and quantity. Metadata is the information that describes digital items; without it, nothing can be found. So although we might have a programme's title and broadcast date, we didn't really know what each programme was about without listening to it, nor did we know the shape and contents of the archive as a whole.

Putting it online

Typically, when it has come to publishing archive content online for its audience, the BBC has focused on specific topics or programmes and then put editorial effort into selecting, describing and publishing them. In the last few years, for example, BBC Radio has released archives online covering the complete Desert Island Discs and Alistair Cooke's Letter from America. But this approach didn't seem practical for an archive of this size, as it would be too time-consuming and expensive. So in 2012 the World Service and engineers from BBC Research & Development joined up to run an experiment demonstrating a way to put massive media archives online using a combination of computers and people. We thought we could use advanced algorithms to listen to every programme in the archive and automatically generate more metadata. We could then use this data to put the whole archive online and ask our audience to explore it while validating and adding to the metadata.

Using computers

To start with, we ran the audio through an automated speech-to-text process. Speech-to-text systems can nowadays be quite accurate, particularly when trained on one person's voice. Applied to the range of recordings, voices and accents in our archive, however, the process generated fairly unreadable transcripts. We then developed robust algorithms that could extract key topics for each programme from these noisy transcripts. We were able to identify topics for all 50,000 programmes in about two weeks, at a cost of only a few thousand pounds.
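The article does not describe the topic-extraction algorithms themselves. The Python below is a minimal sketch of one common way to pull topics out of noisy text: each candidate topic is scored by the TF-IDF weight of its keywords in a transcript. The candidate vocabulary, its keywords, the smoothing formula and the cap of 20 topics are all illustrative assumptions, not the BBC's method.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case a (possibly noisy) transcript and keep only alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def idf_table(archive: list[str]) -> dict[str, float]:
    """Smoothed inverse document frequency of every word across the archive."""
    n_docs = len(archive)
    doc_freq: Counter = Counter()
    for transcript in archive:
        doc_freq.update(set(tokenize(transcript)))  # count each word once per programme
    return {w: math.log((1 + n_docs) / (1 + df)) + 1.0 for w, df in doc_freq.items()}


def extract_topics(
    transcript: str,
    candidates: dict[str, set[str]],
    idf: dict[str, float],
    top_n: int = 20,
) -> list[tuple[str, float]]:
    """Rank candidate topics by the summed TF-IDF weight of their keywords.

    Keywords absent from the archive get zero weight; topics scoring zero are
    dropped, and at most top_n topics are returned, highest score first.
    """
    counts = Counter(tokenize(transcript))
    scores = {}
    for topic, keywords in candidates.items():
        score = sum(counts[k] * idf.get(k, 0.0) for k in keywords)
        if score > 0:
            scores[topic] = score
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_n]


# Toy transcripts and a hypothetical candidate vocabulary.
archive = [
    "the election in sierra leone and the election results",
    "an interview about film making and the film director",
    "news from freetown sierra leone today",
]
candidates = {
    "Sierra_Leone": {"sierra", "leone", "freetown"},
    "Film": {"film", "director"},
    "Election": {"election", "vote"},
}
idf = idf_table(archive)
print([topic for topic, _ in extract_topics(archive[0], candidates, idf)])
# → ['Election', 'Sierra_Leone']
```

A genuine subject tends to be mentioned repeatedly, while recognition errors are scattered one-off words. Summing weights over occurrences therefore gives some robustness to noise. Words that appear in many programmes receive a lower weight.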

For the extracted topics we use ‘linked data’, tying every topic to an item in DBpedia, a linked database based on Wikipedia. This means that everything in our system is a ‘thing’ with a unique identifier and a link to the web, and not just a potentially ambiguous word. So, for example, we should know whether a programme is about Oasis (the 90s band) or an oasis (the geographical feature).
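Tying a word to the right DBpedia item means choosing between candidate meanings. The article does not say how this is done. The Python below is a hedged sketch of one simple technique: it compares each candidate's cue words with the surrounding transcript text, a simplified form of the Lesk overlap method. The sense inventory and cue words are hypothetical. The URIs follow DBpedia's http://dbpedia.org/resource/<Wikipedia title> naming pattern. Production entity-linking systems use much richer context models.

```python
import re
from typing import Optional

# Hypothetical sense inventory: each DBpedia resource URI is paired with a few
# cue words suggesting that meaning. The cue words are illustrative only.
SENSES: dict[str, dict[str, set[str]]] = {
    "oasis": {
        "http://dbpedia.org/resource/Oasis_(band)": {
            "band", "album", "gallagher", "manchester", "britpop", "song",
        },
        "http://dbpedia.org/resource/Oasis": {
            "desert", "water", "sahara", "palm", "spring", "caravan",
        },
    },
}


def disambiguate(word: str, context: str) -> Optional[str]:
    """Pick the DBpedia URI whose cue words overlap most with the context.

    Returns None if the word is not in the inventory, or if no sense shares
    a single cue word with the context.
    """
    senses = SENSES.get(word.lower())
    if not senses:
        return None
    context_words = set(re.findall(r"[a-z]+", context.lower()))
    best_uri, best_overlap = None, 0
    for uri, cues in senses.items():
        overlap = len(cues & context_words)
        if overlap > best_overlap:
            best_uri, best_overlap = uri, overlap
    return best_uri


print(disambiguate("Oasis", "The Gallagher brothers formed the band in Manchester"))
# → http://dbpedia.org/resource/Oasis_(band)
print(disambiguate("oasis", "Caravans crossing the Sahara stopped for water"))
# → http://dbpedia.org/resource/Oasis
```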

Once we'd processed the whole archive, we had up to twenty unambiguous topics for each programme. The algorithms worked pretty well for programmes about a single issue, though they struggled with magazine programmes that cover many topics. So these automatically generated topics are still not all correct: the computers aren't really listening to the programmes and don't truly understand what they are about.

And people ...

But we thought that these automatically generated topics, together with the original metadata, were good enough to design and build a browsable and searchable website for the archive. We planned to ask our audience to use this online archive and help us by validating the automatically generated data and adding their own, ‘crowdsourcing’ the final part of the problem. Crowdsourcing is where a group of volunteers is asked to help solve a problem, often online. Crowdsourcing applications on the internet have ranged from scrutinising MPs' expense claims to transcribing old newspapers and classifying new galaxies.

Over the summer of 2012 we built the first prototype of this website. In the prototype (found at http://bit.ly/bbcwsarchive) users can search for and listen to the programmes in the archive, then vote on whether the associated topics are correct and add new topics that they think are relevant; we call this ‘tagging’ programmes. The prototype has only been open for a few months with limited publicity. Even with a relatively small set of active users, nearly 8,500 distinct programmes have been listened to (around 20% of the entire archive) and 4,500 distinct programmes have had some tagging activity (around 10%). Most of the activity has been voting on topics, with slightly more votes agreeing with topics than disagreeing, but a significant number of new topics have also been added.
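The article reports that users vote on whether each topic is correct, but not how those votes are combined. Here is a minimal Python sketch, assuming simple majority rule and a hypothetical minimum of three votes before a topic counts as confirmed or rejected. The programme IDs and topic names are made up.

```python
from collections import defaultdict

Vote = tuple[str, str, bool]  # (programme_id, topic, agrees_with_topic)


def tally_votes(votes: list[Vote]) -> dict[tuple[str, str], tuple[int, int]]:
    """Count agreeing and disagreeing votes for each (programme, topic) pair."""
    counts: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])
    for programme_id, topic, agree in votes:
        counts[(programme_id, topic)][0 if agree else 1] += 1
    return {key: (a, d) for key, (a, d) in counts.items()}


def topic_status(agree: int, disagree: int, min_votes: int = 3) -> str:
    """Classify a topic by simple majority once it has enough votes.

    Topics with fewer than min_votes votes, or an exact tie, stay
    'unverified', so a single vote cannot confirm or reject a topic.
    """
    total = agree + disagree
    if total < min_votes or agree == disagree:
        return "unverified"
    return "confirmed" if agree > disagree else "rejected"


votes = [
    ("p1", "Oasis_(band)", True),
    ("p1", "Oasis_(band)", True),
    ("p1", "Oasis_(band)", False),
    ("p1", "Sahara", False),
    ("p1", "Sahara", False),
]
for (programme, topic), (agree, disagree) in tally_votes(votes).items():
    print(programme, topic, topic_status(agree, disagree))
# → p1 Oasis_(band) confirmed
# → p1 Sahara unverified
```

Requiring a minimum number of votes trades speed for robustness. With few active users, many topics will stay unverified for a long time.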

We have seen two main kinds of user. One group primarily wants to listen to programmes in the archive and might tag things while they're there. The other, smaller group actively wants to help and even sees tagging as an enjoyable task in itself. As a number of studies of crowdsourcing predict, this latter group has done a disproportionate amount of the tagging, normally around specific topics or particular programmes.

We have also developed technology to identify contributors' voices in programmes. People's voices have unique audio characteristics, and we can use these to identify where the same person is speaking within a single programme and even across different programmes. The technology is limited: we can't yet determine who a speaker is unless we have a definitive sample of them speaking. But we can ask our users to tell us who each speaker is, and then propagate that information to all the other programmes where that voice occurs.
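The propagation step can be sketched in Python under stated assumptions. Suppose each speech segment has already been turned into a fixed-length voice embedding by some speaker-recognition model, which is not shown and not described in the article. A name that a user gives one segment can then be copied to any segment whose embedding is similar enough. Cosine similarity, the 0.9 threshold, the segment IDs, the speaker name and the toy three-dimensional vectors are all hypothetical.

```python
import math

# Hypothetical: real embeddings would come from an upstream speaker model.
Embedding = list[float]


def cosine(a: Embedding, b: Embedding) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def propagate_names(
    segments: dict[str, Embedding],
    labels: dict[str, str],
    threshold: float = 0.9,
) -> dict[str, str]:
    """Copy user-supplied speaker names to segments with a similar voice.

    segments maps segment IDs (from any programme) to voice embeddings;
    labels maps the segments users have named to those names. Each unnamed
    segment takes the name of its most similar labelled segment if that
    similarity reaches the threshold; otherwise it stays unnamed.
    """
    names = dict(labels)
    for seg_id, emb in segments.items():
        if seg_id in labels:
            continue
        best_name, best_sim = None, threshold
        for labelled_id, name in labels.items():
            sim = cosine(emb, segments[labelled_id])
            if sim >= best_sim:
                best_name, best_sim = name, sim
        if best_name is not None:
            names[seg_id] = best_name
    return names


segments = {
    "prog1/seg1": [0.90, 0.10, 0.00],
    "prog1/seg2": [0.10, 0.90, 0.00],
    "prog2/seg7": [0.88, 0.12, 0.01],  # toy vector close to prog1/seg1
    "prog3/seg4": [0.00, 0.10, 0.95],
}
print(propagate_names(segments, {"prog1/seg1": "Jane Doe"}))
# → {'prog1/seg1': 'Jane Doe', 'prog2/seg7': 'Jane Doe'}
```

Comparing every unnamed segment with every labelled one is fine for a sketch. For a 50,000-programme archive, an approximate nearest-neighbour index would be the usual choice.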

Research

The aim of our experiment is to find out whether this is a practical method of publishing media archives online and to discover the best ways of doing it. Several areas of our research are raising interesting questions.

How good do the speech-to-text and topic extraction algorithms need to be? We aim to improve them by feeding the information created by people back into the systems to help the computers learn. For instance, if people frequently vote down a topic because it is always irrelevant, the computers can learn not to generate it again. How can we best present this archive online and make things findable when we know we only have noisy and incomplete data? And how do we know when the metadata has reached a level that is ‘good enough’?
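The article states the goal of computers learning from down-votes but not the mechanism. The simplest version is an archive-wide suppression list. Below is a hedged Python sketch, assuming vote tuples of the form (programme_id, topic, agrees) and hypothetical thresholds: at least 10 votes, with more than 80% disagreeing. It is a crude stand-in for retraining the extraction models, not the team's method.

```python
from collections import Counter


def topics_to_suppress(
    votes: list[tuple[str, str, bool]],
    min_votes: int = 10,
    max_reject_rate: float = 0.8,
) -> set[str]:
    """Return topics that voters reject so consistently the tagger should drop them.

    A topic is suppressed once it has at least min_votes votes archive-wide
    and more than max_reject_rate of them disagree.
    """
    totals: Counter = Counter()
    rejections: Counter = Counter()
    for _programme_id, topic, agree in votes:
        totals[topic] += 1
        if not agree:
            rejections[topic] += 1
    return {
        topic
        for topic, n in totals.items()
        if n >= min_votes and rejections[topic] / n > max_reject_rate
    }


def filter_topics(candidate_topics: list[str], suppressed: set[str]) -> list[str]:
    """Drop suppressed topics from newly generated ones, preserving order."""
    return [t for t in candidate_topics if t not in suppressed]


# Hypothetical votes: "Weather" is almost always rejected.
votes = [(f"p{i}", "Weather", False) for i in range(12)]
votes.append(("p0", "Weather", True))
votes += [(f"p{i}", "Cricket", True) for i in range(10)]
votes.append(("p1", "Rare_topic", False))

suppressed = topics_to_suppress(votes)
print(suppressed)  # → {'Weather'}
print(filter_topics(["Cricket", "Weather", "Rare_topic"], suppressed))
# → ['Cricket', 'Rare_topic']
```

A blocklist is coarse. A topic that is wrong for most programmes may still be right for a few, and this approach would hide it there too.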

Who will find this archive useful? What will they use it for, and how can we make it a better experience for them? So far we have identified several distinct potential audiences, including BBC production staff making programmes, academic researchers interested in topics that the World Service has covered, and fans and enthusiasts of particular genres or programmes.

What data and information will people contribute, and what are the best ways to encourage them to help improve the data? Do they like voting on what is correct, or would they prefer a wiki model where the most recent edit is always the one displayed? We have had requests to edit programme titles and descriptions where they are obviously wrong or misspelt, and some listeners have even sent us recordings of programmes that were missing from our original archive.

Conclusion

To recap: the World Service created a massive digitised radio archive with fairly sparse metadata. We set up computers to listen to all of these radio programmes and automatically generate topics for each one. Using this data, we put the whole archive online and invited people to browse and listen. While using the website, they could validate existing topics and add new ones, making the whole archive better for everyone.

Humans and computers can each, on their own, tackle some of the problems of putting large media archives online. However, human cataloguing is very expensive and time-consuming, and computer-generated information can be error-prone. We believe that combining the two can be a more accurate, cheaper and more scalable solution.

To explore the BBC World Service archive of radio programmes and help us improve it, sign up to the experiment at http://bit.ly/bbcwsarchive

Tristan Ferne and Mark Flashman BBC Research & Development